How To Fix The DirectX Rasterisation Rules

Up to and including Direct3D 9, DirectX has always rasterised to render target pixel centres and has always looked up textures from texel edges. Because of this, it is more difficult than it should be to write a flexible shader system in which parts of your pipeline are image-based. It is much simpler to work in a unified system where you write to render target pixel edges, and this article details a simple way of achieving this.

I've been motivated to write this because there seems to be little accurate documentation on this topic. The interested reader can compare this text with the relevant page of the DirectX SDK documentation.

How Stuff Should Work (In An Ideal World)

Ideally, when you use graphics hardware to process one texture into another, you should not have to care how many texels your source and destination images have; you should be able to deal with the whole thing in viewport and texture coordinates.

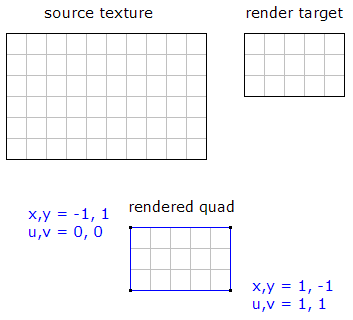

For example, to downsample one texture into another we would like to render single viewport-space quad as in the following diagram:

The above coordinates are independent of texture and render target resolution, and of any fancy multisampling modes you may have enabled on the rasteriser. As you can see, the quad does a pixel-perfect resample of the source texture with nice simple numbers like -1, 0 and 1.
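To make the ideal model concrete, here is a minimal C++ sketch of its arithmetic along one axis. It assumes a 2:1 downsample and treats texture coordinates 0 and 1 as the outer texel edges; the function names `ideal_texcoord_at_pixel` and `texel_space` are hypothetical and exist only for illustration.

```cpp
#include <cassert>

// Ideal model: viewport x in [-1, 1] spans the *edges* of the render target,
// and u in [0, 1] spans the *edges* of the source texture.

// Texture coordinate sampled at the centre of destination pixel i when a
// full-viewport quad maps viewport x = -1..1 to u = 0..1.
float ideal_texcoord_at_pixel(int i, int dst_width) {
    assert(dst_width > 0 && i >= 0 && i < dst_width);
    // Pixel i covers [i, i + 1) in pixel-edge units, so its centre is i + 0.5.
    return (i + 0.5f) / dst_width;
}

// Convert a texture coordinate to a continuous texel index in which integer
// values are texel *centres* (so 0.5 lies exactly between texels 0 and 1).
float texel_space(float u, int src_width) {
    assert(src_width > 0);
    return u * src_width - 0.5f;
}
```

With an 8-texel source and a 4-pixel destination, destination pixel i samples texel-space position 2i + 0.5, exactly halfway between source texels 2i and 2i + 1, so a bilinear fetch averages the two: a pixel-perfect 2:1 box downsample.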

How DirectX Does It

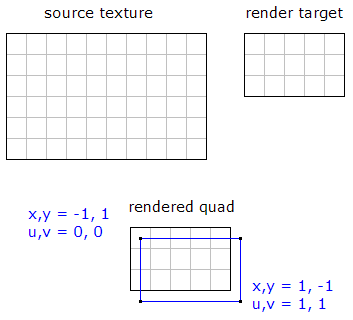

Since DirectX actually renders to pixel centres, what you get by default is the following:

Ow, my eyes. The source image has effectively been shifted by half a destination pixel towards the lower-right corner of the render target. This artifact is so common that you often see it in DirectX games and demos. Drivers are also sometimes guilty of not accounting for it during StretchRect.
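The same one-axis arithmetic under the Direct3D 9 rules can be sketched in C++ as follows. It assumes a viewport origin of 0 and the Direct3D 9 viewport transform, which maps clip-space x = -1 to screen x = 0 and x = 1 to screen x = W, with each pixel sampled at its integer screen coordinate. The helper names are hypothetical.

```cpp
#include <cassert>

// Direct3D 9 viewport transform for x, assuming the viewport origin is 0:
// clip-space x = -1 maps to screen x = 0 and x = 1 maps to screen x = W.
float d3d9_screen_x(float ndc_x, int width) {
    return (ndc_x + 1.0f) * 0.5f * width;
}

// Texture coordinate that pixel i receives from a full-viewport quad under
// Direct3D 9 rules. Pixel i is sampled at screen x = i, so the quad's left
// edge (screen x = 0) passes straight through the centre of pixel 0.
float d3d9_texcoord_at_pixel(int i, int width) {
    assert(width > 0 && i >= 0 && i < width);
    const float ndc_x = 2.0f * i / width - 1.0f;  // inverse of d3d9_screen_x
    return (ndc_x + 1.0f) * 0.5f;                 // quad maps x in [-1, 1] to u in [0, 1]
}
```

Pixel i now samples u = i / W instead of (i + 0.5) / W. For an 8-to-4 downsample that is texel-space position 2i - 0.5 rather than 2i + 0.5: one whole source texel (half a destination pixel) off along x, and y behaves the same way, which is why the image appears shifted towards the lower right.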

How To Fix DirectX Once (And Then Stop Worrying About It)

What we really want to do is make DirectX behave like our ideal model, and do so in a way where we implement something once, then forget about it. If you write all of your shaders in a high-level language such as HLSL, this is easy to do.

At the end of each vertex shader we need to move the screenspace position by half a viewport pixel up and to the left. Because clip space spans two units across the viewport, half a pixel is 1/width horizontally and 1/height vertically. If we always reserve constant register c0 for this offset, then the first half of our solution is to upload this constant every time the viewport changes. The following C++ code fragment is an example:

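```cpp
// Called whenever the viewport or render target changes.
// device is an IDirect3DDevice9*; viewportWidth/viewportHeight are in pixels.
// Half a pixel in clip space is 1/size: shift x left (-) and y up (+,
// because clip-space y points up while screen y points down).
float texelOffset[] = {-1.0f/viewportWidth, 1.0f/viewportHeight, 0.0f, 0.0f};

// Upload one float4 to vertex shader constant register c0.
device->SetVertexShaderConstantF(0, texelOffset, 1);
```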
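A small C++ sketch can check that these two constants do what is claimed: they move the quad by exactly half a pixel, after which pixel i samples the ideal (i + 0.5) / W. It assumes a viewport origin of (0, 0) and the Direct3D 9 viewport transform; `screen_x`, `screen_y`, `offset_x`, `offset_y` and `corrected_texcoord_at_pixel` are hypothetical helpers, not part of any Direct3D API.

```cpp
#include <cassert>

// Direct3D 9 viewport transform with the viewport origin at (0, 0).
// Clip-space y points up and screen y points down, hence the flip.
float screen_x(float ndc_x, int width)  { return (ndc_x + 1.0f) * 0.5f * width; }
float screen_y(float ndc_y, int height) { return (1.0f - ndc_y) * 0.5f * height; }

// The two components uploaded to c0: half a pixel in clip space.
// Clip space spans 2 units across the viewport, so half a pixel is 1/size.
float offset_x(int width)  { assert(width > 0);  return -1.0f / width; }   // move left
float offset_y(int height) { assert(height > 0); return  1.0f / height; }  // move up

// Texture coordinate sampled at pixel i after the quad has been offset.
// Direct3D 9 samples pixel i at screen x = i; u is interpolated linearly
// from 0 at the quad's left edge to 1 at its right edge.
float corrected_texcoord_at_pixel(int i, int width) {
    assert(width > 0 && i >= 0 && i < width);
    const float left  = screen_x(-1.0f + offset_x(width), width);  // -0.5
    const float right = screen_x( 1.0f + offset_x(width), width);  // W - 0.5
    return (static_cast<float>(i) - left) / (right - left);
}
```

The quad's edges now land at screen -0.5 and W - 0.5, which are pixel edges under Direct3D 9's integer pixel centres, so the rasteriser reproduces the ideal model's mapping.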

The second half of the solution is to ensure that each vertex shader passes the final screenspace position through a function that applies this offset in register c0. The following HLSL code is an example:

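```hlsl
// This constant and function live in a global HLSL include.

// Half-pixel offset in clip space, uploaded by the application to c0.
uniform float2 texel_offset : register(c0);

// Apply the offset to a clip-space position. The offset is scaled by w so
// that it is still exactly half a pixel after the perspective divide.
float4 final_position(float4 screen_pos)
{
    float4 result = screen_pos;
    result.xy += texel_offset * result.ww;
    return result;
}

// Simple screenspace-corrected vertex shader example: in_position is a
// full-viewport quad already in clip space (x, y in [-1, 1], w = 1).
void simple_resample_vs(in float4 in_position : POSITION0,
                        out float4 out_position : POSITION0,
                        out float2 out_texcoord : TEXCOORD0)
{
    out_position = final_position(in_position);
    // Map x: -1..1 to u: 0..1 and y: 1..-1 to v: 0..1 (texture v points down).
    out_texcoord = float2(0.5f, -0.5f) * in_position.xy + 0.5f.xx;
}
```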
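The HLSL helper only runs on a device, so here is a hypothetical C++ mirror of `final_position` that shows why the offset is multiplied by w. The `Float4` struct and the `ndc_x`/`ndc_y` helpers are illustrative stand-ins for the shader's float4 and for the perspective divide the hardware performs after the vertex shader.

```cpp
#include <cassert>

struct Float4 { float x, y, z, w; };

// CPU mirror of the HLSL final_position(): the offset is scaled by w
// because the rasteriser divides x and y by w afterwards.
Float4 final_position(Float4 p, float off_x, float off_y) {
    p.x += off_x * p.w;
    p.y += off_y * p.w;
    return p;
}

// Perspective divide performed by the hardware after the vertex shader.
float ndc_x(const Float4& p) { assert(p.w != 0.0f); return p.x / p.w; }
float ndc_y(const Float4& p) { assert(p.w != 0.0f); return p.y / p.w; }
```

After the divide, the vertex moves by exactly the offset whatever its w. Without the `* w` factor it would move by offset / w instead, so perspective-projected geometry would not be shifted by the intended half pixel.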

Conclusion

The key to getting this solution to work is remembering to pass the final screenspace position through the final_position function in every vertex shader in your project. This way you can assume that all texture reads and writes occur on texel edges, which vastly simplifies algorithms that replace scene geometry with rendered images and removes all texture-size-dependent constant uploads from post-processing shaders.

Although this article is very simple, I hope you find the idea helpful. I have employed this technique on multiple platforms in both work and hobby code, so I can vouch for its correctness.