Hybrid Bidirectional Path Tracing

I'd like to share my results from converting a CPU-only bidirectional path tracer into a CPU/GPU hybrid, with the CPU doing shading and sampling and the GPU doing ray intersections. These results are a bit old: I originally posted them as a thread on the ompf forum, and later found out that the thread had been cited in Combinatorial Bidirectional Path-Tracing for Efficient Hybrid CPU/GPU Rendering, so I'll summarise them here.

Bidirectional path tracing is usually implemented as a nested loop over the vertices of an eye sub-path and a light sub-path, with inline calls into the scene traversal for closest-hit rays and shadow rays. To get a large number of rays in flight, I turned this into a state machine that yields whenever it needs to fire a ray and, once the intersection results become available some time later, restores its state and continues shading and sampling. I then implemented a basic job system that spreads 64K of these state machines over the available CPU cores in small groups. This lets me build up 64K ray requests, with all the shading and sampling done on the CPU.
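A minimal sketch of the yield/resume idea, assuming Python generators as the state machines. `Ray`, `Hit`, `path_state_machine`, `run_batches` and the `trace_batch` callback are hypothetical names; the bounce loop follows a single sub-path rather than full eye/light connections, and everything runs on one thread instead of a job system. The point is only the control flow: each machine yields a ray request, the driver gathers one request per machine into a batch, traces the whole batch at once, and resumes each machine with its own result.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Generator, List, Optional


@dataclass
class Ray:
    origin: tuple
    direction: tuple


@dataclass
class Hit:
    distance: Optional[float]  # None means the ray escaped the scene


def path_state_machine(max_bounces: int) -> Generator[Ray, Hit, float]:
    """Hypothetical path sampler: yields each ray it needs traced, resumes with the Hit."""
    throughput = 1.0
    origin, direction = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
    for _ in range(max_bounces):
        hit = yield Ray(origin, direction)  # suspend until the batch has been traced
        if hit.distance is None:
            break
        # ... shading and sampling of the next direction would happen here ...
        throughput *= 0.5  # placeholder for BSDF * cos / pdf
    return throughput


def run_batches(machines: List[Generator[Ray, Hit, float]],
                trace_batch: Callable[[List[Ray]], List[Hit]]) -> Dict[int, float]:
    """Drive all machines: one batched trace call per round of ray requests."""
    pending: Dict[int, Ray] = {}
    results: Dict[int, float] = {}
    for i, m in enumerate(machines):  # run each machine up to its first ray request
        try:
            pending[i] = next(m)
        except StopIteration as stop:
            results[i] = stop.value
    while pending:
        ids = list(pending)
        hits = trace_batch([pending[i] for i in ids])  # stand-in for the GPU traversal
        next_pending: Dict[int, Ray] = {}
        for i, hit in zip(ids, hits):
            try:
                next_pending[i] = machines[i].send(hit)  # restore state, continue shading
            except StopIteration as stop:
                results[i] = stop.value  # path finished
        pending = next_pending
    return results
```

In the tracer described here, the role of `trace_batch` is played by the OptiX traversal of each 64K-ray batch.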

The GPU side traces the 64K rays through the scene and returns 64K intersection results. For simplicity, I used OptiX (1.x at the time) for the traversal. The whole system is double buffered, so one batch of rays can be built across N CPU cores while the previous batch is being traced on the GPU.
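The double buffering can be sketched as follows, assuming the state machines are split into two halves so that batch k only depends on the results of batch k-2. A single worker thread stands in for the GPU; `double_buffered`, `build_batch`, `trace_batch` and `consume` are hypothetical names, not OptiX calls.

```python
from concurrent.futures import ThreadPoolExecutor


def double_buffered(num_batches, build_batch, trace_batch, consume):
    """Overlap CPU batch building with 'GPU' tracing of the previous batch.

    build_batch(k)    -> list of rays for batch k (CPU side, hypothetical)
    trace_batch(rays) -> list of hits (stand-in for the GPU traversal)
    consume(k, hits)  -> hands results back to the state machines of batch k
    """
    with ThreadPoolExecutor(max_workers=1) as gpu:  # one worker thread = one device queue
        in_flight = None  # (batch index, future) currently being traced
        for k in range(num_batches):
            rays = build_batch(k)  # CPU builds batch k while batch k-1 is traced
            if in_flight is not None:
                prev_k, fut = in_flight
                consume(prev_k, fut.result())  # wait for batch k-1 only now
            in_flight = (k, gpu.submit(trace_batch, rays))
        if in_flight is not None:  # drain the last batch
            prev_k, fut = in_flight
            consume(prev_k, fut.result())
```

With this ordering, the CPU only waits on the GPU when tracing a batch takes longer than building the next one.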

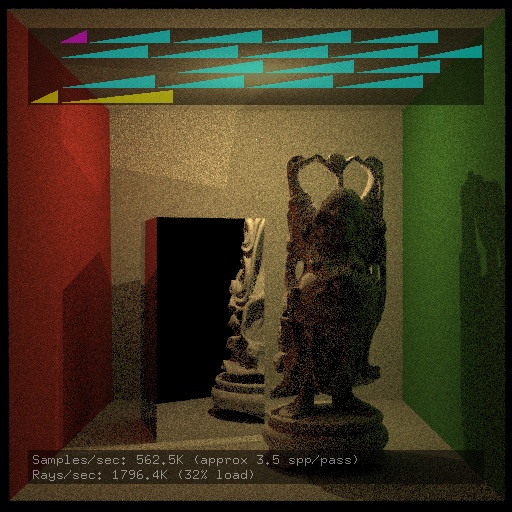

Here's a shot of it after a few seconds (hence the noise) with the profiler up:

The top four cyan rows are the four cores of a Core 2 Quad (Q6600 @ 2.4 GHz), and the bottom yellow row is a GTX 275. The single magenta job accumulates samples; doing this in one job avoids sample collisions during shading.
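One way to read the magenta accumulation job, sketched under assumptions: in bidirectional path tracing, light sub-path vertices connected directly to the camera can contribute to any pixel, so two shading jobs could otherwise write the same pixel at the same time. In this sketch, shading jobs (threads standing in for cores) only return lists of `(pixel_index, rgb)` splats, and a single accumulation pass is the only code that writes the framebuffer. `shade_group`, `render_pass` and the splat pattern are hypothetical placeholders.

```python
from concurrent.futures import ThreadPoolExecutor


def shade_group(group_id, width, height):
    """Hypothetical shading job: returns splats instead of writing the framebuffer."""
    splats = []
    for s in range(4):
        # light-path splats can land on arbitrary pixels, including ones other jobs touch
        pixel = (group_id * 7 + s * 13) % (width * height)
        splats.append((pixel, (0.25, 0.25, 0.25)))
    return splats


def render_pass(num_groups, width, height, framebuffer):
    # shading runs in parallel but never touches the framebuffer
    with ThreadPoolExecutor() as pool:
        splat_lists = list(pool.map(lambda g: shade_group(g, width, height), range(num_groups)))
    # single accumulation job: the only writer, so colliding splats cannot race
    for splats in splat_lists:
        for pixel, (r, g, b) in splats:
            acc = framebuffer[pixel]
            framebuffer[pixel] = (acc[0] + r, acc[1] + g, acc[2] + b)
```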

Some observations:

- My CPU is the bottleneck by a long way.

- OptiX spin-waits on a CPU core while the GPU is busy. I assume this is by design: recent CUDA releases let you choose between low-latency spin-waiting and higher-latency blocking on an OS event, but OptiX appears to always use the former, whereas here I'd prefer the latter so that the core could be used for shading instead.

- The first yellow bar is a memcpy that moves rays and intersection results over PCIe. I'd like to try page-locked host memory to avoid this copy, but OptiX doesn't expose it.

The authors of Combinatorial Bidirectional Path-Tracing for Efficient Hybrid CPU/GPU Rendering also noticed this CPU bottleneck, so they increased the relative load on the GPU by using correlated sampling of a small set of light sub-paths. This introduces an interesting performance/coverage trade-off, and the paper goes on to discuss how to optimise it.
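Some rough arithmetic on why reusing light sub-paths raises the GPU's share of the work, under a deliberately simplified and hypothetical model: every vertex of an eye sub-path is connected by a shadow ray to every vertex of each light sub-path it is paired with, and CPU cost is counted only as the number of sub-paths sampled (connection evaluation is assumed cheap). Neither assumption is taken from the paper, and `shadow_rays_per_subpath` is an illustrative name.

```python
def shadow_rays_per_subpath(num_eye, num_light, eye_len, light_len, combinatorial):
    """Shadow rays generated per sub-path the CPU samples (hypothetical cost model)."""
    if combinatorial:
        # every eye sub-path is connected to every light sub-path in a small shared pool
        sampled = num_eye + num_light
        rays = num_eye * num_light * eye_len * light_len
    else:
        # classic pairing: each eye sub-path gets its own independent light sub-path
        sampled = num_eye + num_eye
        rays = num_eye * eye_len * light_len
    return rays / sampled
```

With 64 eye sub-paths and 3-vertex sub-paths on both sides, classic pairing gives 576 / 128 = 4.5 shadow rays per sampled sub-path, while sharing a pool of 4 light sub-paths gives 2304 / 68, roughly 33.9. The price is that all those connections reuse the same few light sub-paths, which is the coverage side of the trade-off.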

Here is a 1024x1024 render of the same scene after roughly 10 minutes, long enough to clear up most of the noise:

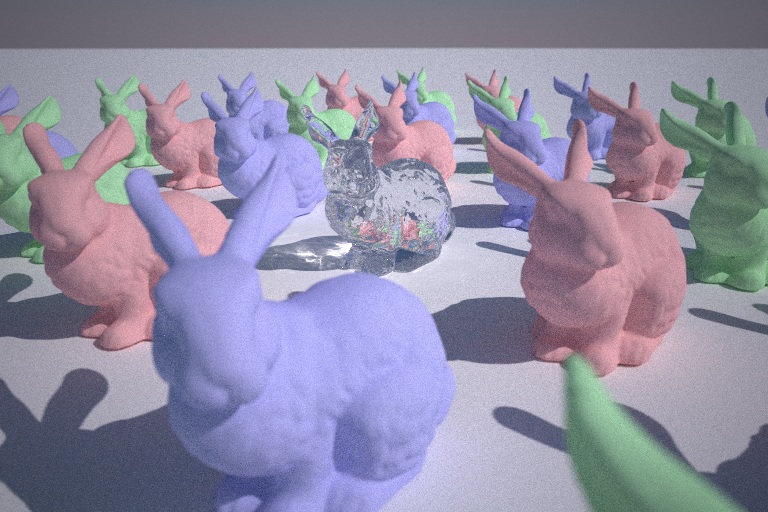

And another piece of serious coder art, this time using a thin lens camera model and experimenting with unbiased methods that guide light sub-paths towards refractive objects, to get caustic paths in larger outdoor scenes.
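For reference, a common way to generate a thin lens camera ray, similar to the perspective camera in PBRT: sample a point on the lens aperture, find where the corresponding pinhole ray crosses the plane of focus, and aim the new ray from the lens sample at that point. This sketch works in camera space with the camera looking down +z; `thin_lens_ray`, `lens_radius` and `focal_distance` are illustrative names, and it uses a simple square-root disk mapping rather than a concentric one.

```python
import math


def normalize(v):
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def sample_unit_disk(u1, u2):
    # uniform disk sampling via sqrt of the radius (not the concentric mapping)
    r = math.sqrt(u1)
    theta = 2.0 * math.pi * u2
    return r * math.cos(theta), r * math.sin(theta)


def thin_lens_ray(pinhole_dir, lens_radius, focal_distance, u1, u2):
    """Camera-space thin lens ray; pinhole_dir must have z > 0 (camera looks down +z)."""
    d = normalize(pinhole_dir)
    # point where the ideal pinhole ray meets the plane of focus z = focal_distance
    t = focal_distance / d[2]
    focus = (d[0] * t, d[1] * t, d[2] * t)
    # ray origin is a random point on the lens aperture (lens plane z = 0)
    lx, ly = sample_unit_disk(u1, u2)
    origin = (lens_radius * lx, lens_radius * ly, 0.0)
    direction = normalize((focus[0] - origin[0], focus[1] - origin[1], focus[2] - origin[2]))
    return origin, direction
```

All lens samples for a given pixel converge on the same point of the focus plane, so geometry at `focal_distance` stays sharp while everything else blurs; with `lens_radius = 0` it reduces to a pinhole camera.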

While this was an interesting side project, I don't plan to continue with this approach. If you're considering splitting work between the CPU and GPU, I'd advise doing it in a way that allows dynamic load balancing, for example by using OpenCL on both devices and splitting up the framebuffer. Personally, I'm going to keep focusing on rendering primarily on the GPU.
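A minimal sketch of framebuffer-level dynamic load balancing, assuming each device is represented by a hypothetical `render_tile` callback (in practice this might wrap, say, an OpenCL command queue per device) and Python threads stand in for the devices. Tiles sit in a shared queue and each device pulls the next one as soon as it finishes, so a faster device ends up rendering more tiles without any fixed split.

```python
import queue
import threading


def render_tiles(tiles, devices):
    """devices: dict of device name -> hypothetical render_tile(tile) callback."""
    work = queue.Queue()
    for tile in tiles:
        work.put(tile)
    done = {name: [] for name in devices}
    lock = threading.Lock()

    def worker(name, render_tile):
        while True:
            try:
                tile = work.get_nowait()  # grab the next tile as soon as this device is free
            except queue.Empty:
                return  # no tiles left
            render_tile(tile)
            with lock:
                done[name].append(tile)  # record which device rendered which tile

    threads = [threading.Thread(target=worker, args=(n, f)) for n, f in devices.items()]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return done
```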