Multiple Importance

At work I wrote a global illumination system from scratch. It used classical ray tracing for the direct lighting, and [photon mapping](http://en.wikipedia.org/wiki/Photon_mapping) with final gather for the indirect term. I use the past tense because we've since switched over to lightcuts as the main renderer, which, thanks to the work of an awesome colleague, is giving us better (and faster) results.

To complete the set, I thought I'd have a go at implementing a bidirectional path tracer, a full Veach, if you will...

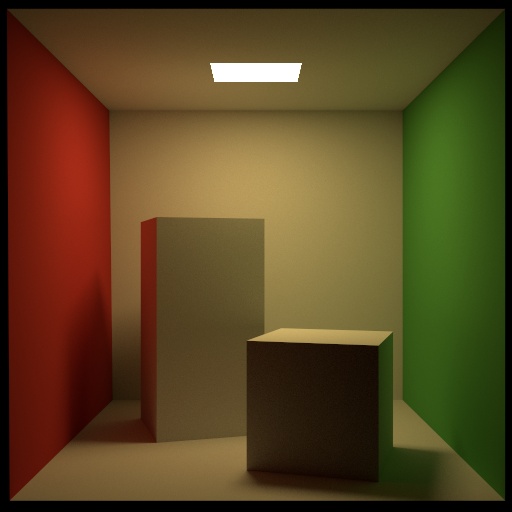

The above image is from this little bidirectional path tracing experiment, using around 500 samples per pixel. I'm actually sick of staring at that damned box! I've been staring at it for years! This image shows a couple of interesting things:

- Don't do all of your anti-aliasing in high dynamic range (see the light edge)

- Even in low dynamic range, a box filter doesn't look that nice (see short box top edges)

If you want nicely anti-aliased results from HDR imagery, you probably want to render at two or three times the resolution you need, tone map down to LDR, and only then down-sample to your target resolution. To generate HDR pixels with less aliasing in the first place, you need something other than a box filter. If you're interested in this sort of thing, the freely available Chapter 7 of PBRT will get you started. In fact, I recommend reading the whole book; it's very good.
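Why does the order matter? Tone mapping is non-linear, so averaging a very bright sample with a dim one *before* tone mapping lets the bright one dominate. Here's a tiny sketch of that effect, assuming a simple Reinhard-style `x / (1 + x)` operator as a stand-in tone mapper and made-up radiance values for a pixel straddling the edge of a light. The helper names (`reinhard`, `tonemap`, `downsample`) are hypothetical, not from any particular renderer.

```python
def reinhard(x):
    # Simple global tone-mapping operator x / (1 + x); an assumed stand-in
    # for whatever tone mapper the renderer actually uses.
    return x / (1.0 + x)

def tonemap(img):
    # Apply the tone mapper to every pixel of a 2D greyscale image.
    return [[reinhard(v) for v in row] for row in img]

def downsample(img, factor):
    # Average each factor x factor block into one output pixel (box down-sample).
    h, w = len(img) // factor, len(img[0]) // factor
    return [
        [
            sum(img[y * factor + dy][x * factor + dx]
                for dy in range(factor) for dx in range(factor)) / (factor * factor)
            for x in range(w)
        ]
        for y in range(h)
    ]

# One output pixel rendered at 2x2: the left half sees a bright light (radiance 100),
# the right half sees a dim wall (radiance 0.1). Values are made up for illustration.
hdr = [[100.0, 0.1],
       [100.0, 0.1]]

aa_in_hdr = tonemap(downsample(hdr, 2))[0][0]  # filter in HDR, then tone map
aa_in_ldr = downsample(tonemap(hdr), 2)[0][0]  # tone map first, then filter
print(round(aa_in_hdr, 3), round(aa_in_ldr, 3))  # prints: 0.98 0.541
```

Filtering in HDR gives an edge pixel that is almost pure white, so the edge of the light still looks jagged; tone mapping first gives a sensible mid-grey.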

Anyhow, what I wanted to post about was the awesome variance reduction power of multiple importance sampling. As covered in great detail in Eric Veach's thesis, the basic idea is that you assign your path a weight, and this weight is computed by considering all the ways you could have generated the path, not just the one you actually used. An intuitive way to think about this: suppose you generate a path, and for the way you generated it (e.g. an eye sub-path randomly hitting a light source) it was fairly unlikely. If you consider the path purely in terms of how you generated it, you divide its contribution by that small probability and end up with a very strong sample in your image. If, by considering all the other ways you could have generated the path, you find that it is in fact quite likely by other means (e.g. by connecting light and eye sub-paths), then its weight is reduced, which in turn reduces variance. By a lot. Here's an example:
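To make the weighting concrete, here's a minimal sketch of two weighting functions described in Veach's thesis: the balance heuristic and the power heuristic. The pdf values and the contribution `f` are hypothetical numbers for one path; in a real bidirectional path tracer they would be the pdfs of each construction strategy for that path, all expressed in the same measure.

```python
def balance_weight(pdfs, used):
    # Balance heuristic: pdf of the strategy actually used, divided by the
    # sum of the pdfs of every strategy that could have produced this path.
    return pdfs[used] / sum(pdfs)

def power_weight(pdfs, used, beta=2.0):
    # Power heuristic; beta = 2 is the value Veach suggests.
    return pdfs[used] ** beta / sum(p ** beta for p in pdfs)

# Hypothetical pdfs for three ways of building the same path:
#   0: eye sub-path randomly hits the light (the way we actually found it)
#   1, 2: connect an eye sub-path to a light sub-path at different vertices
pdfs = [0.001, 0.5, 0.2]
f = 1.0  # hypothetical path contribution (throughput times emitted radiance)

unweighted = f / pdfs[0]                          # 1000: a bright "firefly"
weighted = balance_weight(pdfs, 0) * f / pdfs[0]  # equals f / sum(pdfs), about 1.43
print(unweighted, weighted)
```

For any path the weights over all strategies sum to one, which is what keeps the combined estimate unbiased while the unlikely strategy's contribution gets scaled right down.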

The amazing thing is that these images were generated using the exact same set of paths (just 4 paths per pixel), but then either summed with uniform weights or with weights computed using multiple importance sampling. The bright spots in the first image are mostly from eye sub-paths that randomly hit the light source. Multiple importance sampling assigns these paths a very low weight, which brings their sample value much closer to the expected value for the pixel, hence variance is much reduced. As Veach mentions, this processing is also basically free: you already know the visibility term between each vertex of your path, you just need to consider the probabilities for all the ways you could have constructed it.
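The same effect shows up in a toy 1D analogue (not a path tracer): integrate a narrow, bright function over [0, 1] using two strategies, one uniform over the whole domain (like an eye path happening to hit the light) and one that only samples the bright interval (like light sampling). Each iteration draws one sample per strategy, and the *same* samples are combined either with uniform 0.5 weights or with the balance heuristic. The names `WIDTH`, `EMISSION`, `pdf_a` and `pdf_b` are made up for this sketch.

```python
import random
import statistics

WIDTH = 0.001           # width of the hypothetical "light source" interval
EMISSION = 1.0 / WIDTH  # chosen so the true integral of f over [0, 1] is exactly 1

def f(x):
    # Integrand: narrow and bright, like a small light source.
    return EMISSION if x < WIDTH else 0.0

def pdf_a(x):
    # Strategy A: uniform over [0, 1] (stand-in for "eye path hits the light").
    return 1.0

def pdf_b(x):
    # Strategy B: uniform over the light interval only (stand-in for light sampling).
    return 1.0 / WIDTH if x < WIDTH else 0.0

def estimate(rng, n):
    uniform_est, mis_est = [], []
    for _ in range(n):
        xa = rng.random()          # one sample from strategy A
        xb = rng.random() * WIDTH  # one sample from strategy B
        # Same two samples, combined with uniform weights...
        uniform_est.append(0.5 * f(xa) / pdf_a(xa) + 0.5 * f(xb) / pdf_b(xb))
        # ...or with the balance heuristic: f / (sum of pdfs of all strategies).
        mis_est.append(f(xa) / (pdf_a(xa) + pdf_b(xa)) + f(xb) / (pdf_a(xb) + pdf_b(xb)))
    return uniform_est, mis_est

uniform_est, mis_est = estimate(random.Random(1), 100_000)
print("uniform:", statistics.fmean(uniform_est), statistics.pvariance(uniform_est))
print("MIS:    ", statistics.fmean(mis_est), statistics.pvariance(mis_est))
```

Both estimators average to roughly 1, but with uniform weights the rare strategy-A hits contribute 500 each, whereas the balance heuristic scales them to under 1, so the variance drops by several orders of magnitude.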

There are lots of other awesome things you can learn from the Veach thesis, and since the work will be 12 years old this year, you probably should know this stuff by now, right?